Agenda

📚 Distributed multi-xPU computing, MPI

💻 Running multi-GPU applications on supercomputers

🚧 Exercises:

Fake-parallelisation, Julia MPI

Using

ImplicitGlobalGrid.jlMulti-xPU diffusion 2D

Distributed computing

Fake parallelisation

Julia MPI (CPU + GPU)

Using ParallelStencil.jl together with ImplicitGlobalGrid.jl

If this is the case or not - hold-on, we certainly have some good stuff for everyone

Adds one additional layer of parallelisation:

Global problem does no longer "fit" within a single compute node (or GPU)

Local resources (mainly memory) are finite, e.g.,

CPUs: increase the number of cores beyond what a single CPU can offer

GPUs: overcome the device memory limitation

Simply said:

If one CPU or GPU is not sufficient to solve a problem, then use more than one and solve a subset of the global problem on each.

Distributed (memory) computing permits to take advantage of computing "clusters", many similar compute nodes interconnected by high-throughput network. That's also what supercomputers are.

So here we go. Let's assume we want to solve a certain problem, which we will call the "global problem". This global problem, we split then into several local problems that execute concurrently.

Two scaling approaches exist:

strong scaling

weak scaling

Increasing the amount of computing resources to resolve the same global problem would increase parallelism and may result in faster execution (wall-time). This parallelisation is called strong scaling; the resources are increased but the global problem size does not change, resulting in an increase in the number of (smaller) local problems that can be solved in parallel.

The strong scaling approach is often used when parallelising legacy CPU codes, as increasing the number of parallel local problems can lead to some speed-up, reaching an optimum beyond which additional local processes is no longer be beneficial.

However, we won't follow that path when developing parallel multi-GPU applications from scratch. Why?

Because GPUs' performance is very sensitive to the local problem size as we experienced when trying to tune the kernel launch parameters (threads, blocks, i.e., the local problem size).

When developing multi-GPU applications from scratch, it is likely more suitably to approach distributed parallelisation from a weak scaling perspective; defining first the optimal local problem size to resolve on a single GPU and then increasing the number of optimal local problems (and the number of GPUs) until reaching the global problem one originally wants to solve.

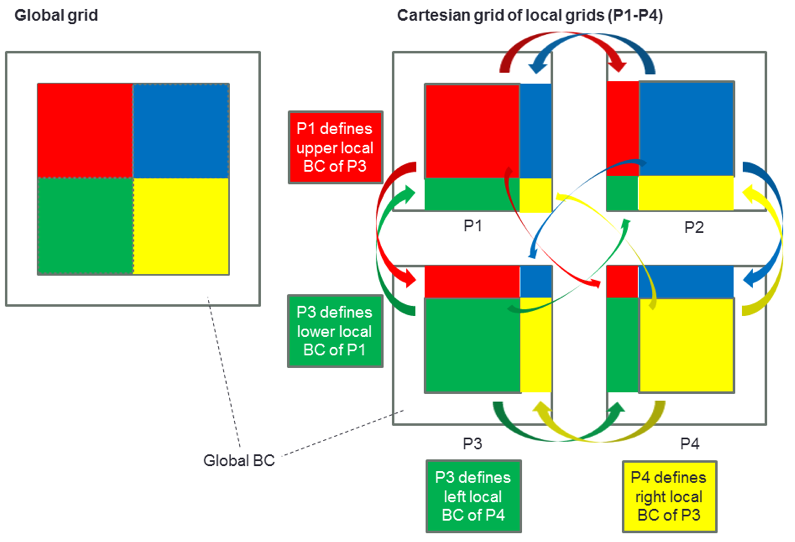

We can thus replicate a local problem multiple times in each dimension of the Cartesian space to obtain a global grid, which is therefore defined implicitly. Local problems define each others local boundary conditions by exchanging internal boundary values using intra-node communication (e.g., message passing interface - MPI), as depicted on the figure hereafter:

Many things could potentially go wrong in distributed computing. However, the ultimate goal (at least for us) is to keep up with parallel efficiency.

The parallel efficiency defines as the speed-up divided by the number of processors. The speed-up defines as the execution time using an increasing number of processors normalised by the single processor execution time. We will use the parallel efficiency in a weak scaling configuration.

Ideally, the parallel efficiency should stay close to 1 while increasing the number of computing resources proportionally with the global problem size (i.e. keeping the constant local problem sizes), meaning no time is lost (no overhead) in due to, e.g., inter-process communication, network congestion, congestion of shared filesystem, etc... as shown in the figure hereafter:

we will explore distributed computing with Julia's MPI wrapper MPI.jl. This will enable our codes to run on multiple CPUs and GPUs in order to scale on modern multi-CPU/GPU nodes, clusters and supercomputers. In the proposed approach, each MPI process handles one CPU or GPU.

We're going to work out the following steps to tackle distributed parallelisation in this lecture (in 5 steps):

As a first step, we will look at the below 1-D diffusion code which solves the linear diffusion equations using a "fake-parallelisation" approach. We split the calculation on two distinct left and right domains, which requires left and right C arrays, CL and CR, respectively.

In this "fake parallelisation" code, the computations for the left and right domain are performed sequentially on one process, but they could be computed on two distinct processes if the needed boundary update (often referred to as halo update in literature) was done with MPI.

The idea of this fake parallelisation approach is the following:

# Compute physics locally

CL[2:end-1] .= CL[2:end-1] .+ dt*D*diff(diff(CL)/dx)/dx

CR[2:end-1] .= CR[2:end-1] .+ dt*D*diff(diff(CR)/dx)/dx

# Update boundaries (MPI)

CL[end] = CR[2]

CR[1] = CL[end-1]

# Global picture

C .= [CL[1:end-1]; CR[2:end]]We see that a correct boundary update will be the critical part for a successful implementation. In our approach, we need an overlap of 2 cells between CL and CR in order to avoid any wrong computations at the transition between the left and right domains.

Run the "fake parallelisation" 1-D diffusion code l8_diffusion_1D_2procs.jl, which is missing the boundary updates of the 2 fake processes and describe what you see in the visualisation.

Then, add the required boundary update:

# Update boundaries (MPI)

CL[end] = CR[2]

CR[1] = CL[end-1]in order make the code work properly and run it again. Note what has changed in the visualisation.

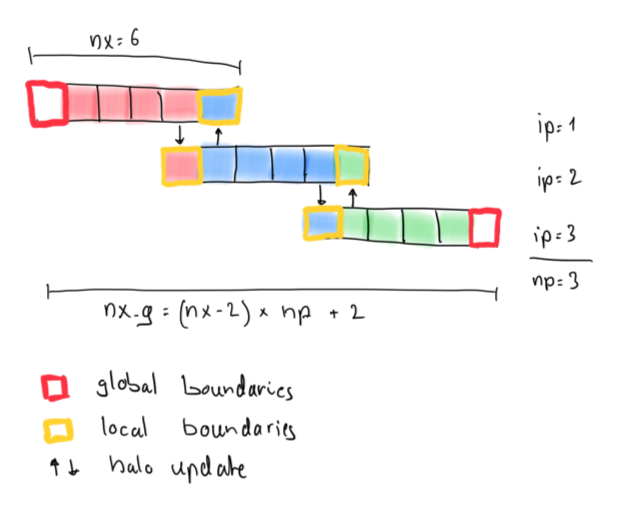

The next step will be to generalise the fake parallelisation with 2 fake processes to work with n fake processes. The idea of this generalised fake parallelisation approach is the following:

for ip = 1:np # compute physics locally

C[2:end-1,ip] .= C[2:end-1,ip] .+ dt*D*diff(diff(C[:,ip])/dxg)/dxg

end

for ip = 1:np-1 # update boundaries

C[end,ip ] = C[ 2,ip+1]

C[ 1,ip+1] = C[end-1,ip ]

end

for ip = 1:np # global picture

i1 = 1 + (ip-1)*(nx-2)

Cg[i1:i1+nx-2] .= C[1:end-1,ip]

endThe array C contains now n local domains where each domain belongs to one fake process, namely the fake process indicated by the second index of C (ip). The boundary updates are to be adapted accordingly. All the physical calculations happen on the local chunks of the arrays.

We only need "global" knowledge in the definition of the initial condition.

The previous simple initial conditions can be easily defined without computing any Cartesian coordinates. To define other initial conditions we often need to compute global coordinates.

In the code below, which serves to define a Gaussian anomaly in the centre of the domain, Cartesian coordinates can be computed for each cell based on the process ID (ip), the cell ID (ix), the array size (nx), the overlap of the local domains (2) and the grid spacing of the global grid (dxg); moreover, the origin of the coordinate system can be moved to any position using the global domain length (lx):

# Initial condition

for ip = 1:np

for ix = 1:nx

x[ix,ip] = ( (ip-1)*(nx-2) + (ix-0.5) )*dxg - 0.5*lx

C[ix,ip] = exp(-x[ix,ip]^2)

end

i1 = 1 + (ip-1)*(nx-2)

xt[i1:i1+nx-2] .= x[1:end-1,ip]; if (ip==np) xt[i1+nx-1] = x[end,ip] end

Ct[i1:i1+nx-2] .= C[1:end-1,ip]; if (ip==np) Ct[i1+nx-1] = C[end,ip] end

endn fake processes)Modify the initial condition in the 1-D diffusion code l8_diffusion_1D_nprocs.jl to a centred Gaussian anomaly.

Then run this code which is missing the boundary updates of the n fake processes and describe what you see in the visualisation. Then, add the required boundary update in order make the code work properly and run it again. Note what has changed in the visualisation.

We are now ready to write a code that will truly distribute calculations on different processors using MPI.jl for inter-process communication.

Let us see what are the somewhat minimal requirements that will allow us to write a distributed code in Julia using MPI.jl. We will solve the following linear diffusion physics (see l8_diffusion_1D_mpi.jl):

for it = 1:nt

qx .= .-D*diff(C)/dx

C[2:end-1] .= C[2:end-1] .- dt*diff(qx)/dx

endTo enable distributed parallelisation, we will do the following steps:

Initialise MPI and set up a Cartesian communicator

Implement a boundary exchange routine

Create a "global" initial condition

Finalise MPI

To (1.) initialise MPI and prepare the Cartesian communicator, we do (upon import MPI):

import MPI

MPI.Init()

dims = [0]

comm = MPI.COMM_WORLD

nprocs = MPI.Comm_size(comm)

MPI.Dims_create!(nprocs, dims)

comm_cart = MPI.Cart_create(comm, dims, [0], 1)

me = MPI.Comm_rank(comm_cart)

coords = MPI.Cart_coords(comm_cart)

neighbors_x = MPI.Cart_shift(comm_cart, 0, 1)where me represents the process ID unique to each MPI process (the analogue to ip in the fake parallelisation).

Then, we need to (2.) implement a boundary update routine, which can have the following structure:

@views function update_halo!(A, neighbors_x, comm)

# Send to / receive from neighbour 1 ("left neighbor")

if neighbors_x[1] != MPI.PROC_NULL

sendbuf = A[2]

recvbuf = zeros(1)

MPI.Send(sendbuf, neighbors_x[1], 0, comm)

MPI.Recv!(recvbuf, neighbors_x[1], 1, comm)

A[1] = recvbuf[1]

end

# Send to / receive from neighbour 2 ("right neighbor")

if neighbors_x[2] != MPI.PROC_NULL

sendbuf = A[end-1]

recvbuf = zeros(1)

MPI.Recv!(recvbuf, neighbors_x[2], 0, comm)

MPI.Send(sendbuf, neighbors_x[2], 1, comm)

A[end] = recvbuf[1]

end

return

endThen, we (3.) initialize C with a "global" initial Gaussian anomaly that spans correctly over all local domains. This can be achieved, e.g., as given here:

x0 = coords[1]*(nx-2)*dx

xc = [x0 + ix*dx - dx/2 - 0.5*lx for ix=1:nx]

C = exp.(.-xc.^2)where x0 represents the first global x-coordinate on every process (computed in function of coords) and xc represents the local chunk of the global coordinates on each local process (this is analogue to the initialisation in the fake parallelisation).

Last, we need to (4.) finalise MPI prior to returning from the main function:

MPI.Finalize()All the above described is found in the code l8_diffusion_1D_mpi.jl, except for the boundary updates (see 2.).

Run the code l8_diffusion_1D_mpi.jl which is still missing the boundary updates three times: with 1, 2 and 4 processes (replacing np by the number of processes):

mpiexecjl -n <np> julia --project <my_script.jl>Visualise the results after each run with the l8_vizme1D_mpi.jl code (adapt the variable nprocs!). Describe what you see in the visualisation. Then, add the required boundary update in order make the code work properly and run it again. Note what has changed in the visualisation.

sendbuffer and receive buffer, storing the right value in the send buffer; 2) use MPI.Send and MPI.Recv! to send/receive the data; 3) store the received data in the right position in the array.Congratulations! You just did a distributed memory diffusion solver in only 70 lines of code.

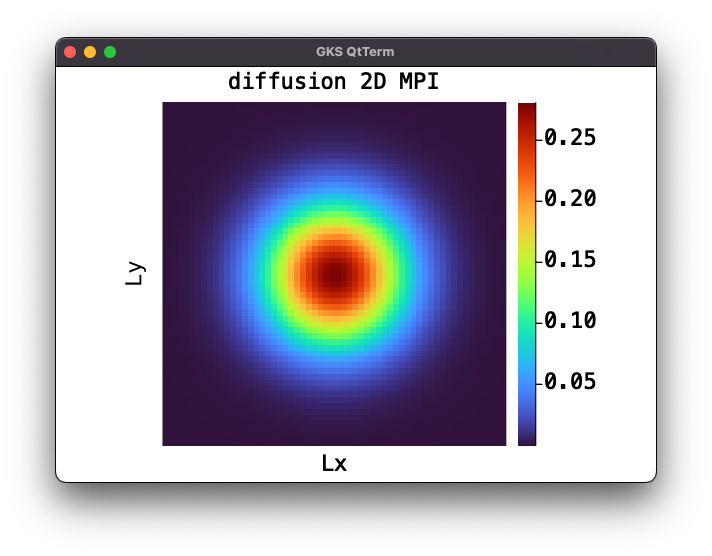

Let us now do the same in 2D: there is not much new there, but it may be interesting to work out how boundary update routines can be defined in 2D as one now needs to exchange vectors instead of single values.

👉 You'll find a working 1D script in the scripts/l8_scripts folder after the lecture.

Run the code l8_diffusion_2D_mpi.jl which is still missing the boundary updates three times: with 1, 2 and 4 processes.

Visualise the results after each run with the l8_vizme2D_mpi.jl code (adapt the variable nprocs!). Describe what you see in the visualisation. Then, add the required boundary update in order make the code work properly and run it again. Note what has changed in the visualisation.

The last step is to create a multi-GPU solver out of the above multi-CPU solver. CUDA-aware MPI is of great help in this task, because it allows to directly pass GPU arrays to the MPI functions.

Besides facilitating the programming, it can leverage Remote Direct Memory Access (RDMA) which can be of great benefit in many HPC scenarios.

Translate the code diffusion_2D_mpi.jl from Step 4 to GPU using GPU array programming. Note what changes were needed to go from CPU to GPU in this distributed solver.

Use a similar approach as implemented in the CPU code to perform the boundary updates. You can use the copyto! function in order to copy the data from the GPU memory into the send buffers (CPU memory) or to copy the receive buffer data (CPU memory) baclk to the GPU array.

l8_hello_mpi_gpu.jl code to get an idea on how to select a GPU based on node-local MPI infos.Head to the exercise section for further directions on this step which is part of this week's homework assignments.

This completes the introduction to distributed parallelisation with Julia.

Note that high-level Julia packages as for example ImplicitGlobalGrid.jl can render distributed parallelisation with GPU and CPU for HPC a very simple task. Let's check it out!

ImplicitGlobalGrid.jlLet's have look at ImplicitGlobalGrid.jl's repository.

ImplicitGlobalGrid.jl can render distributed parallelisation with GPU and CPU for HPC a very simple task. Moreover, ImplicitGlobalGrid.jl elegantly combines with ParallelStencil.jl.

Finally, the cool part: using both packages together enables to hide communication behind computation. This feature enables a parallel efficiency close to 1.

For this development, we'll start from the l8_diffusion_2D_perf_xpu.jl code.

Only a few changes are required to enable multi-xPU execution, namely:

Initialise the implicit global grid

Use global coordinates to compute the initial condition

Update halo (and overlap communication with computation)

Finalise the global grid

Tune visualisation

But before we start programming the multi-xPU implementation, let's get setup with GPU MPI on Piz Daint. Follow steps are needed:

Launch a salloc on 4 nodes

Install the required MPI-related packages

Test your setup running l8_hello_mpi.jl and l8_hello_mpi_gpu.jl scripts on 1-4 nodes

To (1.) initialise the global grid, one first needs to use the package

using ImplicitGlobalGridThen, one can add the global grid initialisation in the # Derived numerics section

me, dims = init_global_grid(nx, ny, 1) # Initialization of MPI and more...

dx, dy = Lx/nx_g(), Ly/ny_g()init_global_grid takes care of MPI GPU mapping based on node-local MPI infos. Have a look at the l8_hello_mpi_gpu.jl code to get an idea about the process.Then, for (2.), one can use x_g() and y_g() to compute the global coordinates in the initialisation (to correctly spread the Gaussian distribution over all local processes)

C = @zeros(nx,ny)

C .= Data.Array([exp(-(x_g(ix,dx,C)+dx/2 -Lx/2)^2 -(y_g(iy,dy,C)+dy/2 -Ly/2)^2) for ix=1:size(C,1), iy=1:size(C,2)])The halo update (3.) can be simply performed adding following line after the compute! kernel

update_halo!(C)Now, when running on GPUs, it is possible to hide MPi communication behind computations!

This option implements as:

@hide_communication (8, 2) begin

@parallel compute!(C2, C, D_dx, D_dy, dt, _dx, _dy, size_C1_2, size_C2_2)

C, C2 = C2, C # pointer swap

update_halo!(C)

endThe @hide_communication (8, 2) will first compute the first and last 8 and 2 grid points in x and y dimension, respectively. Then, while exchanging boundaries the rest of the local domains computations will be perform (overlapping the MPI communication).

To (4.) finalise the global grid,

finalize_global_grid()needs to be added before the return of the "main".

The last changes to take care of is to (5.) handle visualisation in an appropriate fashion. Here, several options exists.

One approach would for each local process to dump the local domain results to a file (with process ID me in the filename) in order to reconstruct to global grid with a post-processing visualisation script (as done in the previous examples). Libraries like, e.g., ADIOS2 may help out there.

Another approach would be to gather the global grid results on a master process before doing further steps as disk saving or plotting.

To implement the latter and generate a gif, one needs to define a global array for visualisation:

if do_visu

if (me==0) ENV["GKSwstype"]="nul"; if isdir("viz2D_mxpu_out")==false mkdir("viz2D_mxpu_out") end; loadpath = "./viz2D_mxpu_out/"; anim = Animation(loadpath,String[]); println("Animation directory: $(anim.dir)") end

nx_v, ny_v = (nx-2)*dims[1], (ny-2)*dims[2]

if (nx_v*ny_v*sizeof(Data.Number) > 0.8*Sys.free_memory()) error("Not enough memory for visualization.") end

C_v = zeros(nx_v, ny_v) # global array for visu

C_inn = zeros(nx-2, ny-2) # no halo local array for visu

xi_g, yi_g = LinRange(dx+dx/2, Lx-dx-dx/2, nx_v), LinRange(dy+dy/2, Ly-dy-dy/2, ny_v) # inner points only

endThen, the plotting routine can be adapted to first gather the inner points of the local domains into the global array (using gather! function) and then plot and/or save the global array (here C_v) from the master process me==0:

# Visualize

if do_visu && (it % nout == 0)

C_inn .= Array(C)[2:end-1,2:end-1]; gather!(C_inn, C_v)

if (me==0)

opts = (aspect_ratio=1, xlims=(xi_g[1], xi_g[end]), ylims=(yi_g[1], yi_g[end]), clims=(0.0, 1.0), c=:turbo, xlabel="Lx", ylabel="Ly", title="time = $(round(it*dt, sigdigits=3))")

heatmap(xi_g, yi_g, Array(C_v)'; opts...); frame(anim)

end

endTo finally generate the gif, one needs to place the following after the time loop:

if (do_visu && me==0) gif(anim, "diffusion_2D_mxpu.gif", fps = 5) endIGG_CUDAAWARE_MPI=1. Note that the examples using ImplicitGlobalGrid.jl would also work if USE_GPU = false; however, the communication and computation overlap feature is then currently not yet available as its implementation relies at present on leveraging CUDA streams.Let's recall what we learned today about distributed computing in Julia using GPUs:

We used fake parallelisation to understand the correct boundary exchange procedure.

We implemented 1D and 2D diffusion solvers in Julia using MPI for distributed memory parallelisation on both CPUs and GPUs (using blocking communication).

We combined ParallelStencil.jl with ImplicitGlobalGrid.jl to implement distributed memory parallelisation on multiple CPU and GPUs.

lecture8 folder (and not in the PorousConvection folder). The git commit hash (or SHA) of the final push needs to be uploaded on Moodle (more).👉 See Logistics for submission details.

The goal of this exercise is to:

Familiarise with distributed computing

Learn about MPI on the way

In this exercise, you will:

Finalise the fake parallelisation scripts discussed in lecture 8 (2 procs and n procs)

Finalise the 2D Julia MPI script

Create a Julia MPI GPU version of the 2D Julia MPI script discussed here

Create a new lectrue_8 folder for this first exercise in your shared private GitHub repository for this week's exercises.

Finalise the l8_diffusion_1D_2procs.jl and l8_diffusion_1D_nprocs.jl scripts discussed during lecture 8. Make sure to correctly implement the halo update in order to exchange the internal boundaries among the fake parallel processes (left and right and ip in the "2procs" and "nprocs" codes, respectively). See here for details.

Report in two separate figures the final distribution of concentration C for both fake parallel codes. Include these figure in a first section of your lecture's 8 README.md adding a description sentence to each.

Finalise the l8_diffusion_2D_mpi.jl script discussed during lecture 8. In particular, finalise the update_halo functions to allow for correct internal boundary exchange among the distributed parallel MPI processes. Add the final code to your GitHub lecture 8 folder.

For each of the (4) neighbour exchanges:

Start by defining a sendbuffer sendbuf to hold the vector you need to send

Initialise a receive buffer recvbuf to later hold the vector received from the corresponding neighbouring process

Use MPI.Send and MPI.Recv! functions to perform the boundary exchange

Assign the values within the receive buffer to the corresponding row or column of the array A

In a new section of your lecture's 8 README.md, add a .gif animation showing the diffusion of the quantity C, running on 4 MPI processes, for the physical and numerical parameters suggested in the initial file. Add a short description of the results and provide the command used to launch the script in the README.md as well.

Create a multi-GPU implementation of the l8_diffusion_2D_mpi.jl script as suggested here. To this end, create a new script l8_diffusion_2D_mpi_gpu.jl that you will upload to your lecture 8 GitHub repository upon completion.

Translate the l8_diffusion_2D_mpi.jl code from exercise 1 (task 3) to GPU using GPU array programming. You can use a similar approach as in the CPU code to perform the boundary updates. You should use copyto! function in order to copy the data from the GPU memory into the send buffers (CPU memory) or to copy the receive buffer data to the GPU array.

The steps to realise this task summarise as following:

Select the GPU based on node-local MPI infos (see the l8_hello_mpi_gpu.jl code to get started.)

Use GPU array initialisation (CUDA.zeros, CuArray(), ...)

Gather the GPU arrays back on the host memory for visualisation or saving (using Array())

Modify the update_halo function; use copyto! to copy device data to the host into the send buffer or to copy host data to the device from the receive buffer

In a new (3rd) section of your lecture's 8 README.md, add .gif animation showing the diffusion of the quantity C, running on 4 GPUs (MPI processes), for the physical and numerical parameters suggested in the initial file. Add a short description of the results and provide the command used to launch the script in the README.md as well. Note what changes were needed to go from CPU to GPU in this distributed solver.

👉 See Logistics for submission details.

The goal of this exercise is to:

Familiarise with distributed computing

Combine ImplicitGlobalGrid.jl and ParallelStencil.jl

Learn about GPU MPI on the way

In this exercise, you will:

Create a multi-xPU version of your the 2D xPU diffusion solver

Keep it xPU compatible using ParallelStencil.jl

Deploy it on multiple xPUs using ImplicitGlobalGrid.jl

Start by fetching the l8_diffusion_2D_perf_xpu.jl code from the scripts/l8_scripts folder and copy it to your lectrue_8 folder.

Make a copy and rename it diffusion_2D_perf_multixpu.jl.

Follow the steps listed in the section from lecture 8 about using ImplicitGlobalGrid.jl to add multi-xPU support to the 2D diffusion code.

The 5 steps you'll need to implement are summarised hereafter:

Initialise the implicit global grid

Use global coordinates to compute the initial condition

Update halo (and overlap communication with computation)

Finalise the global grid

Tune visualisation

Once the above steps are implemented, head to Piz Daint and configure either an salloc or prepare a sbatch script to access 4 nodes.

Run the single xPU l8_diffusion_2D_perf_xpu.jl code on a single CPU and single GPU (changing the USE_GPU flag accordingly) for following parameters

# Physics

Lx, Ly = 10.0, 10.0

D = 1.0

ttot = 1.0

# Numerics

nx, ny = 126, 126

nout = 20and save output C data. Confirm that the difference between CPU and GPU implementation is negligible, reporting it in a new section of the README.md for this exercise 2 within the lecture_8 folder in your shared private GitHub repo.

Then run the newly created diffusion_2D_perf_multixpu.jl script with following parameters on 4 MPI processes having set USE_GPU = true:

# Physics

Lx, Ly = 10.0, 10.0

D = 1.0

ttot = 1e0

# Numerics

nx, ny = 64, 64 # number of grid points

nout = 20

# Derived numerics

me, dims = init_global_grid(nx, ny, 1) # Initialization of MPI and more...Save the global C_v output array. Ensure its size matches the inner points of the single xPU produced output (C[2:end-1,2:end-1]) and then compare the results to the existing 2 outputs produced in Task 2

Now that we are confident the xPU and multi-xPU codes produce correct physical output, we will asses performance.

Use the code diffusion_2D_perf_multixpu.jl and make sure to deactivate visualisation, saving or any other operation that would save to disk or slow the code down.

Strong scaling: Using a single GPU, gather the effective memory throughput T_eff varying nx, ny as following

nx = ny = 16 * 2 .^ (1:10)ttot or nt accordingly.In a new figure you'll add to the README.md, report T_eff as function of nx, and include a short comment on what you see.

Weak scaling: Select the smallest nx,ny values from previous step (2.) for which you've gotten the best T_eff. Run now the same code using this optimal local resolution varying the number of MPI process as following np = 1,4,16,25,64.

In a new figure, report the execution time for the various runs normalising them with the execution time of the single process run. Comment in one sentence on what you see.

Finally, let's assess the impact of hiding communication behind computation achieved using the @hide_communication macro in the multi-xPU code.

Using the 64 MPI processes configuration, run the multi-xPU code changing the values of the tuple after @hide_communication such that

@hide_communication (2,2)

@hide_communication (16,4)

@hide_communication (16,16)Then, you should also run once the code commenting both @hide_communication and corresponding end statements. On a figure report the execution time as function of [no-hidecomm, (2,2), (8,2), (16,4), (16,16)] (note that the (8,2) case you should have from Task 4 and/or 5) making sure to normalise it by the single process execution time (from Task 5). Add a short comment related to your results.