Agenda

📚 Distributed multi-xPU computing with ImplicitGlobalGrid.jl

💻 Documenting your code

🚧 Exercises:

2D diffusion with multi-xPU

Distributed computing

learn about hiding MPI communication behind computations using asynchronous MPI calls

combine ImplicitGlobalGrid.jl and ParallelStencil.jl together

Let's have a look at ImplicitGlobalGrid.jl's repository.

ImplicitGlobalGrid.jl makes distributed parallelisation on GPUs and CPUs for HPC a very simple task. Moreover, ImplicitGlobalGrid.jl combines elegantly with ParallelStencil.jl.

Finally, the cool part: using both packages together makes it possible to hide communication behind computation. This feature enables a parallel efficiency close to 1.

For this development, we'll start from the l9_diffusion_2D_perf_xpu.jl code.

Only a few changes are required to enable multi-xPU execution, namely:

1. Initialise the implicit global grid

2. Use global coordinates to compute the initial condition

3. Update halo (and overlap communication with computation)

4. Finalise the global grid

5. Tune visualisation

But before we start programming the multi-xPU implementation, let's get set up with GPU MPI on daint.alps. The following steps are needed:

Launch an salloc on 2 nodes

Install the required MPI-related packages

Test your setup running l9_hello_mpi.jl and l9_hello_mpi_gpu.jl scripts on 1-2 nodes

To (1.) initialise the global grid, one first needs to load the package:

using ImplicitGlobalGrid

Then, one can add the global grid initialisation in the # Derived numerics section:

me, dims = init_global_grid(nx, ny, 1; select_device = false) # Initialization of MPI and more...

dx, dy = Lx/nx_g(), Ly/ny_g()

Note that ImplicitGlobalGrid.jl's automatic GPU device selection is disabled here with init_global_grid(...; select_device = false).

Then, for (2.), one can use x_g() and y_g() to compute the global coordinates in the initialisation (to correctly spread the Gaussian distribution over all local processes):

C = @zeros(nx,ny)

C .= Data.Array([exp(-(x_g(ix,dx,C)+dx/2 -Lx/2)^2 -(y_g(iy,dy,C)+dy/2 -Ly/2)^2) for ix=1:size(C,1), iy=1:size(C,2)])

The halo update (3.) can simply be performed by adding the following line after the compute! kernel:

update_halo!(C)

Now, when running on GPUs, it is possible to hide MPI communication behind computation!

Hiding communication is implemented as follows:

@hide_communication (8, 2) begin
    @parallel compute!(C2, C, D_dx, D_dy, dt, _dx, _dy, size_C1_2, size_C2_2)
    C, C2 = C2, C # pointer swap
    update_halo!(C)
end

The @hide_communication (8, 2) block will first compute the first and last 8 and 2 grid points in the x and y dimensions, respectively. Then, while the boundaries are being exchanged, the computations for the rest of the local domain are performed (overlapping the MPI communication).
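To see what the tuple (8, 2) means in terms of grid points, the following minimal Julia sketch splits a local nx×ny array into the boundary region (the first and last wx points in x, the first and last wy points in y) and the inner region. The helper split_regions is hypothetical and only mirrors the description above; it is not how ParallelStencil.jl implements @hide_communication.

```julia
# Hypothetical helper (not part of ParallelStencil.jl): split a local nx×ny
# array into the boundary region of widths (wx, wy) and the inner region.
function split_regions(nx::Int, ny::Int, widths::NTuple{2,Int})
    wx, wy = widths
    inner = (wx+1:nx-wx, wy+1:ny-wy)   # points computed while halos are exchanged
    boundary = trues(nx, ny)           # true marks points computed first
    boundary[inner...] .= false
    return boundary, inner
end

nx, ny = 64, 64
boundary, inner = split_regions(nx, ny, (8, 2))
n_boundary = count(boundary)
n_inner    = length(inner[1]) * length(inner[2])
println((n_boundary, n_inner))   # (1216, 2880)
```

With a 64×64 local array, roughly 30% of the points (1216 of 4096) have to be computed before the communication can start; larger local arrays shrink this fraction.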

To (4.) finalise the global grid,

finalize_global_grid()

needs to be added before the return of the "main" function.
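With steps (1.) to (4.) in place, the following serial Julia sketch illustrates what the global coordinates and the halo update achieve for two processes neighbouring in x, assuming an overlap of 2 points between their local arrays. The helpers nx_global, x_global and exchange_halo! are hypothetical stand-ins for nx_g(), x_g() and update_halo!, not their actual implementations. The sketch checks that the two local arrays, after one diffusion step and a halo exchange, agree with a single global array updated the same way.

```julia
# Serial 1D emulation of two processes neighbouring in x. All helpers are
# hypothetical stand-ins, not ImplicitGlobalGrid.jl functions.
const OVERLAP = 2   # assumed overlap between neighbouring local arrays

# Global number of points for `dims` non-periodic processes with `nx` local points each
nx_global(nx, dims; overlap = OVERLAP) = dims*(nx - overlap) + overlap

# Global coordinate of local index `ix` on the process with 0-based coordinate `coord`
x_global(ix, dx, coord, nx; overlap = OVERLAP) = (coord*(nx - overlap) + ix - 1)*dx

# Explicit diffusion step on inner points; first and last entries are copied unchanged
function diffusion_step(C, a)
    Cnew = copy(C)
    for ix in 2:length(C)-1
        Cnew[ix] = C[ix] + a*(C[ix-1] - 2*C[ix] + C[ix+1])
    end
    return Cnew
end

# Halo exchange between a left and a right local array (in place)
function exchange_halo!(left, right)
    left[end] = right[2]       # left halo point  <- right's inner point
    right[1]  = left[end-1]    # right halo point <- left's inner point
    return nothing
end

nx, dims, Lx, a = 6, 2, 1.0, 0.2
nxg = nx_global(nx, dims)      # 2*(6-2)+2 = 10 global points
dx  = Lx/nxg                   # analogous to dx = Lx/nx_g()
f(x) = exp(-(x - Lx/2)^2)

# Local initial conditions computed from global coordinates (the role of x_g())
left  = [f(x_global(ix, dx, 0, nx)) for ix in 1:nx]
right = [f(x_global(ix, dx, 1, nx)) for ix in 1:nx]

# One step on each local array, then the halo exchange (the role of update_halo!)
left_new, right_new = diffusion_step(left, a), diffusion_step(right, a)
exchange_halo!(left_new, right_new)

# Reference: the same step on a single global array
Cg_new = diffusion_step([f((ix-1)*dx) for ix in 1:nxg], a)
println(left_new == Cg_new[1:nx] && right_new == Cg_new[nxg-nx+1:end])   # true
```

Dropping the exchange_halo! call makes the comparison fail, since the halo points would then keep stale values from the previous time step.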

The last change to take care of is (5.) handling visualisation appropriately. Several options exist here.

One approach would be for each local process to dump its local domain results to a file (with the process ID me in the filename) in order to reconstruct the global grid with a post-processing visualisation script (as done in the previous examples). Libraries such as ADIOS2 may help there.

Another approach would be to gather the global grid results on a master process before doing further steps such as saving to disk or plotting.
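The gather approach can be pictured with a small serial sketch: each process contributes the inner points of its local array (halo points stripped, as done with C_inn), and these blocks are placed side by side in a global array of size ((nx-2)*dims[1], (ny-2)*dims[2]), matching nx_v and ny_v in the code that follows. gather_inner is a hypothetical helper that only mimics the result of gather!; with MPI the blocks would be sent to the master process rather than read from a Dict, and placing blocks by 0-based process coordinates is an assumption.

```julia
# Hypothetical helper, not ImplicitGlobalGrid.gather!: assemble the inner points
# of all local arrays (keyed by 0-based process coordinates) into one global array.
function gather_inner(local_arrays::AbstractDict, dims::NTuple{2,Int})
    nx, ny = size(first(values(local_arrays)))
    nxi, nyi = nx - 2, ny - 2                       # inner sizes (1 halo point per side removed)
    C_v = zeros(nxi*dims[1], nyi*dims[2])           # global array for visu
    for ((cx, cy), C) in local_arrays
        C_v[cx*nxi+1:(cx+1)*nxi, cy*nyi+1:(cy+1)*nyi] .= C[2:end-1, 2:end-1]
    end
    return C_v
end

dims = (2, 2); nx, ny = 5, 4
# Fill each local array (halos included) with its own rank number for illustration
local_arrays = Dict((cx, cy) => fill(Float64(cx + dims[1]*cy), nx, ny)
                    for cx in 0:dims[1]-1, cy in 0:dims[2]-1)
C_v = gather_inner(local_arrays, dims)
println(size(C_v))   # (6, 4)
```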

To implement the gather-based approach and generate a gif, one needs to define a global array for visualisation:

if do_visu
    if me == 0
        ENV["GKSwstype"] = "nul"
        isdir("viz2D_mxpu_out") || mkdir("viz2D_mxpu_out")
        loadpath = "./viz2D_mxpu_out/"; anim = Animation(loadpath, String[])
        println("Animation directory: $(anim.dir)")
    end
    nx_v, ny_v = (nx-2)*dims[1], (ny-2)*dims[2]
    if (nx_v*ny_v*sizeof(Data.Number) > 0.8*Sys.free_memory()) error("Not enough memory for visualization.") end
    C_v   = zeros(nx_v, ny_v) # global array for visu
    C_inn = zeros(nx-2, ny-2) # no halo local array for visu
    xi_g, yi_g = LinRange(dx+dx/2, Lx-dx-dx/2, nx_v), LinRange(dy+dy/2, Ly-dy-dy/2, ny_v) # inner points only
end

Then, the plotting routine can be adapted to first gather the inner points of the local domains into the global array (using the gather! function) and then plot and/or save the global array (here C_v) from the master process me==0:

# Visualize
if do_visu && (it % nout == 0)
    C_inn .= Array(C)[2:end-1,2:end-1]; gather!(C_inn, C_v)
    if (me==0)
        opts = (aspect_ratio=1, xlims=(xi_g[1], xi_g[end]), ylims=(yi_g[1], yi_g[end]), clims=(0.0, 1.0), c=:turbo, xlabel="Lx", ylabel="Ly", title="time = $(round(it*dt, sigdigits=3))")
        heatmap(xi_g, yi_g, Array(C_v)'; opts...); frame(anim)
    end
end

To finally generate the gif, one needs to place the following after the time loop:

if (do_visu && me==0) gif(anim, "diffusion_2D_mxpu.gif", fps = 5) end

CUDA-aware MPI can be enabled in ImplicitGlobalGrid.jl by setting the environment variable IGG_CUDAAWARE_MPI=1. Note that the examples using ImplicitGlobalGrid.jl also work with USE_GPU = false; however, the overlap of communication and computation is then not yet available, as its implementation currently relies on GPU streams.

Hiding communication behind computation is a common optimisation technique in distributed stencil computing.

You can think of it as each MPI rank being a watermelon.

(1.) We first compute the updates for the crust region (green), and then directly start the non-blocking MPI communication (Isend/Irecv).

(2.) In the meantime, we asynchronously compute the updates of the inner region (red) of the watermelon.

The aim is to hide the communication started in step (1.) behind the computation of step (2.).
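The two steps can be emulated on the CPU as a minimal sketch: a 1D local array, with a task spawned by Threads.@spawn standing in for the non-blocking MPI calls. It only illustrates the ordering and the requirement that the communication touches crust values only, while the inner region is being updated; it does not reproduce how @hide_communication works internally. The functions update! and step_overlap are hypothetical.

```julia
# Hypothetical kernel: explicit diffusion update of the indices in `range`
function update!(Cnew, C, a, range)
    for ix in range
        Cnew[ix] = C[ix] + a*(C[ix-1] - 2*C[ix] + C[ix+1])
    end
    return nothing
end

# "Crust first, start communication, inner meanwhile". The "communication" is a
# stand-in for MPI Isend/Irecv: a task copying fresh crust values into a send buffer.
function step_overlap(C::Vector{Float64}, a::Float64, w::Int)
    nx = length(C)
    Cnew = copy(C)                      # end points act as fixed boundaries/halos
    update!(Cnew, C, a, 2:1+w)          # (1.) crust region, left side
    update!(Cnew, C, a, nx-w:nx-1)      # (1.) crust region, right side
    sendbuf = zeros(2)
    comm = Threads.@spawn begin         # "non-blocking" communication starts
        sendbuf[1] = Cnew[2]            # value for the left neighbour's halo
        sendbuf[2] = Cnew[nx-1]         # value for the right neighbour's halo
    end
    update!(Cnew, C, a, 2+w:nx-1-w)     # (2.) inner region, overlapping with comm
    wait(comm)                          # communication must finish before the next step
    return Cnew, sendbuf
end

C0 = [exp(-(ix - 20)^2/10) for ix in 1:40]
Cnew, sendbuf = step_overlap(C0, 0.2, 4)

# Reference without overlap: update all inner points in one sweep
Cref = copy(C0)
update!(Cref, C0, 0.2, 2:39)
println(Cnew == Cref && sendbuf == [Cref[2], Cref[39]])   # true
```

Since the crust and inner regions write to disjoint entries of Cnew and the task only reads crust entries, the overlapped step gives exactly the same result as the sequential one.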

We can examine the effect of hiding communication by looking at the profiler trace produced by running a 3D diffusion code under the NVIDIA Nsight Systems profiler (see the Profiling on Alps section for how to launch the profiler).

Profiling trace with hide communication enabled:

Profiling trace with the naive implementation (hide communication disabled):

In this lecture we will learn about:

documentation vs code-comments

why to write documentation

GitHub tools:

rendering of markdown files

gh-pages

some Julia tools:

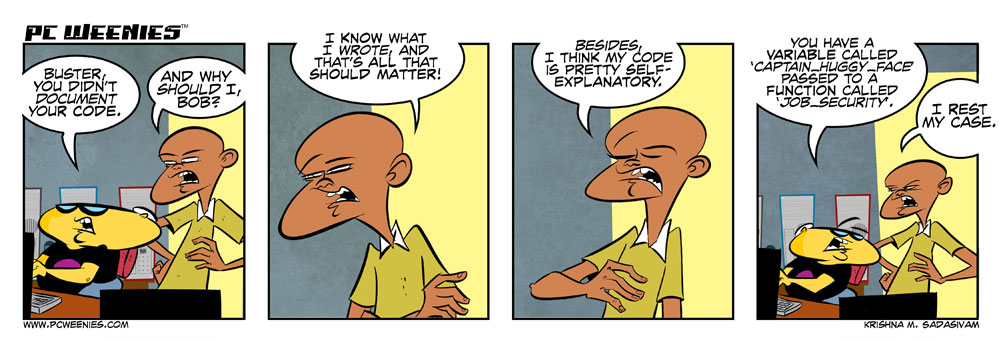

Why should I write code comments?

"Code Tells You How, Comments Tell You Why"

code should be made understandable by itself, as much as possible

comments then should be to tell the "why" you're doing something

but I also write a lot of structuring comments

math-y variables tend to be short and need a comment as well

Why should I write documentation?

documentation should give a bigger overview of what your code does

at the function-level (doc-strings)

at the package-level (README, full-fledged documentation)

to let other people and your future self (probably most importantly) understand what your code is about

Worse than no documentation/code comments is documentation which is outdated.

I find the best way to keep documentation up to date is:

have documentation visible to you, e.g. GitHub README

document what you need yourself

use examples and run them as part of CI (doc-tests, example-scripts)

A Julia doc-string (Julia manual):

is just a string placed directly before the object (no blank line in between) and is interpreted as a Markdown string

can be attached to most things (functions, variables, modules, macros, types)

can be queried in the REPL with ?

"Typical size of a beer crate"
const BEERBOX = 12

?BEERBOX

One can add examples to doc-strings (they can even be part of testing: doc-tests).

(Run the example in the REPL and copy-paste the output into the docstring.)

"""
    transform(r, θ)

Transform polar `(r,θ)` to cartesian coordinates `(x,y)`.

# Example
```jldoctest
julia> transform(4.5, pi/5)
(3.6405764746872635, 2.6450336353161292)
```
"""
transform(r, θ) = (r*cos(θ), r*sin(θ))

?transform

The easiest way to write long-form documentation is to just use GitHub's markdown rendering.

A nice example is this short course by Ludovic (incidentally about solving PDEs on GPUs 🙂).

images are rendered

in-page links are easy, e.g. [_back to workshop material_](#workshop-material)

top-left has a burger-menu for page navigation

can be edited within the web-page (pencil-icon)

👉 this is a good and low-overhead way to produce pretty nice documentation

There are several tools which render .jl files (with special formatting) into markdown files. These files can then be added to GitHub and will be rendered there.

we're using Literate.jl

format is described here

files stay valid Julia scripts, i.e. they can be executed without Literate.jl
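To illustrate the format, here is a minimal, hypothetical script in the Literate.jl style: comment lines starting with `# ` become markdown text, the remaining lines become code blocks, and the file still runs as a plain Julia script.

```julia
# # Exponential decay with forward Euler
# This file follows the Literate.jl convention: comment lines starting with `# `
# become markdown, all other lines become code. It is a hypothetical example.

# Decay rate `k`, time step `dt` and number of time steps `nt`:
k, dt, nt = 0.5, 0.1, 10

# Forward-Euler integration of $du/dt = -k u$ with $u(0) = 1$:
function euler_decay(k, dt, nt)
    u = 1.0
    for _ in 1:nt
        u -= dt*k*u
    end
    return u
end

u_num = euler_decay(k, dt, nt)
u_ex  = exp(-k*nt*dt)

# The numerical result is close to the exact solution $e^{-k t}$ at $t = 1$:
println((u_num, u_ex))
```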

Example

input julia-code in: course-101-0250-00-L8Documentation.jl: scripts/car_travels.jl

output markdown in: course-101-0250-00-L8Documentation.jl: scripts/car_travels.md created with:

Literate.markdown("car_travels.jl", directory_of_this_file, execute=true, documenter=false, credit=false)

But this is not automatic! Manual steps: run Literate, add files, commit and push...

or use GitHub Actions...

Demonstrated in the repo course-101-0250-00-L8Documentation.jl

name: Run Literate.jl
# adapted from https://lannonbr.com/blog/2019-12-09-git-commit-in-actions

on: push

jobs:
  lit:
    runs-on: ubuntu-latest
    steps:
      # Checkout the branch
      - uses: actions/checkout@v4

      - uses: julia-actions/setup-julia@latest
        with:
          version: '1.12'
          arch: x64
      - uses: julia-actions/cache@v1
      - uses: julia-actions/julia-buildpkg@latest

      - name: run Literate
        run: QT_QPA_PLATFORM=offscreen julia --color=yes --project -e 'cd("scripts"); include("literate-script.jl")'

      - name: setup git config
        run: |
          # setup the username and email. I tend to use 'GitHub Actions Bot' with no email by default
          git config user.name "GitHub Actions Bot"
          git config user.email "<>"

      - name: commit
        run: |
          # Stage the file, commit and push
          git add scripts/md/*
          git commit -m "Commit markdown files from Literate"
          git push origin master

If you want full-blown documentation, including, e.g., automatic API documentation generation and versioning, then use Documenter.jl.

Examples:

Notes:

it's geared towards Julia-packages, less for a bunch-of-scripts as in our lecture

Documenter.jl also integrates with Literate.jl.

for more free-form websites, use https://github.com/tlienart/Franklin.jl (as the course website does)

if you want to use it, it's easiest to generate your package with PkgTemplates.jl, which will generate the Documenter setup for you.

we don't use it in this course

👉 See Logistics for submission details.

The goal of this exercise is to:

Familiarise with distributed computing

Combine ImplicitGlobalGrid.jl and ParallelStencil.jl

Learn about GPU MPI on the way

In this exercise, you will:

Create a multi-xPU version of your 2D xPU diffusion solver

Keep it xPU compatible using ParallelStencil.jl

Deploy it on multiple xPUs using ImplicitGlobalGrid.jl

Start by fetching the l9_diffusion_2D_perf_xpu.jl code from the scripts/l9_scripts folder and copying it to your lecture_10 folder.

Make a copy and rename it diffusion_2D_perf_multixpu.jl.

Follow the steps listed in the section from lecture 10 about using ImplicitGlobalGrid.jl to add multi-xPU support to the 2D diffusion code.

The 5 steps you'll need to implement are summarised hereafter:

Initialise the implicit global grid

Use global coordinates to compute the initial condition

Update halo (and overlap communication with computation)

Finalise the global grid

Tune visualisation

Once the above steps are implemented, head to daint.alps and either configure an salloc or prepare an sbatch script to access 1 node.

Run the single-xPU l9_diffusion_2D_perf_xpu.jl code on a single CPU and on a single GPU (changing the USE_GPU flag accordingly) with the following parameters:

# Physics

Lx, Ly = 10.0, 10.0

D = 1.0

ttot = 1.0

# Numerics

nx, ny = 126, 126

nout = 20

and save the output C data. Confirm that the difference between the CPU and GPU implementations is negligible, and report it in a new section of the README.md for this exercise 2 within the lecture_10 folder in your shared private GitHub repo.
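One way to quantify "negligible" is to compare the two saved C arrays through the maximum absolute difference and the relative L2 difference. A minimal sketch, where compare_outputs is a hypothetical helper, the arrays are synthetic stand-ins for the saved CPU and GPU results (loading them from disk is left out), and the 1e-12 relative tolerance is an assumption about what counts as negligible:

```julia
using LinearAlgebra: norm

# Hypothetical helper: difference metrics between two solution arrays
function compare_outputs(C_a::AbstractArray, C_b::AbstractArray)
    size(C_a) == size(C_b) || error("size mismatch: $(size(C_a)) vs $(size(C_b))")
    max_abs = maximum(abs.(C_a .- C_b))
    rel_l2  = norm(C_a .- C_b) / norm(C_a)
    return (max_abs = max_abs, rel_l2 = rel_l2)
end

# Synthetic stand-ins for C saved from the CPU and the GPU runs (126×126)
C_cpu = [exp(-((ix - 63)^2 + (iy - 63)^2)/200) for ix in 1:126, iy in 1:126]
C_gpu = C_cpu .* (1 + 1e-15)       # round-off-level perturbation
res = compare_outputs(C_cpu, C_gpu)
println(res)
println(res.rel_l2 < 1e-12)        # true: differences at round-off level
```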

Then run the newly created diffusion_2D_perf_multixpu.jl script with the following parameters on 4 MPI processes, having set USE_GPU = true:

# Physics

Lx, Ly = 10.0, 10.0

D = 1.0

ttot = 1e0

# Numerics

nx, ny = 64, 64 # number of grid points

nout = 20

# Derived numerics

me, dims = init_global_grid(nx, ny, 1; select_device = false) # Initialization of MPI and more...

Save the global C_v output array. Ensure its size matches the inner points of the single-xPU output (C[2:end-1,2:end-1]) and then compare the results to the 2 existing outputs produced in Task 2.
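Why these resolutions fit together can be checked with a little size bookkeeping. A minimal sketch, assuming the 4 MPI processes are arranged in a 2×2 topology (dims = (2, 2)), an overlap of 2 points between local arrays, and a 1-point halo on each side as in the visualisation code; the zeros array is a stand-in for the saved single-xPU C:

```julia
nx, ny = 64, 64                                  # local resolution per MPI process
dims   = (2, 2)                                  # assumed topology for 4 MPI processes
nx_global_size = dims[1]*(nx-2) + 2              # assumed global size (overlap of 2): 126
nx_v, ny_v = (nx-2)*dims[1], (ny-2)*dims[2]      # size of C_v, as in the visualisation code

C_single     = zeros(126, 126)                   # stand-in for the saved single-xPU C
C_single_inn = C_single[2:end-1, 2:end-1]        # inner points only
println((nx_global_size, (nx_v, ny_v), size(C_single_inn)))   # (126, (124, 124), (124, 124))
```

Under these assumptions the multi-xPU global grid has the same 126 points per dimension as the single-xPU run, so C_v and C_single_inn can be compared elementwise, e.g. with isapprox and a tolerance of your choosing.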

Now that we are confident the xPU and multi-xPU codes produce correct physical output, we will assess performance.

Use the code diffusion_2D_perf_multixpu.jl and make sure to deactivate visualisation, saving or any other operation that would save to disk or slow the code down.

Strong scaling: Using a single GPU, gather the effective memory throughput T_eff while varying nx, ny as follows:

nx = ny = 16 * 2 .^ (1:10)

Adapt ttot or nt accordingly. In a new figure you'll add to the README.md, report T_eff as a function of nx, and include a short comment on what you see.
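As a reminder of how T_eff is evaluated, a minimal sketch. It assumes the compute! kernel reads C and writes C2 once per iteration, so the effectively accessed memory is A_eff = 2·nx·ny·sizeof(Float64) bytes per iteration; adapt A_eff if your kernel accesses other arrays. The iteration time t_it is a made-up placeholder, not a measurement.

```julia
# Effective memory accessed per iteration in GB, assuming 1 read (C) + 1 write (C2)
A_eff(nx, ny; T::Type = Float64) = 2 * nx * ny * sizeof(T) / 1e9

# Effective memory throughput in GB/s, for a wall time per iteration t_it in seconds
T_eff(nx, ny, t_it; T::Type = Float64) = A_eff(nx, ny; T = T) / t_it

resolutions = 16 .* 2 .^ (1:10)    # nx = ny values to sweep: 32, 64, ..., 16384
println(resolutions)

t_it = 1e-3                        # placeholder iteration time, not a measurement
println(T_eff(4096, 4096, t_it))
```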

Weak scaling: Select the smallest nx, ny values from the previous step (2.) for which you obtained the best T_eff. Now run the same code using this optimal local resolution while varying the number of MPI processes as follows: np = 1, 4, 16, 25, 64.

In a new figure, report the execution time for the various runs normalising them with the execution time of the single process run. Comment in one sentence on what you see.
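The normalisation can be sketched as follows with a hypothetical helper; the timings are placeholder values describing ideal weak scaling (constant time), not measurements. Since np takes perfect-square values here, the sketch also lists assumed square process topologies and the resulting global resolution for a hypothetical local nx, assuming an overlap of 2 points.

```julia
# Normalise wall times by the single-process run; for ideal weak scaling all ratios are 1
function normalise_times(nps::Vector{Int}, times::Vector{Float64})
    t1 = times[findfirst(==(1), nps)]
    return times ./ t1
end

nps   = [1, 4, 16, 25, 64]
times = fill(2.0, length(nps))     # placeholder timings, not measurements
println(normalise_times(nps, times))   # [1.0, 1.0, 1.0, 1.0, 1.0]

# Assumed square topologies dims = (√np, √np) and global grid size for local nx
nx_loc = 512                       # hypothetical optimal local resolution
for np in nps
    d = isqrt(np)
    println("np = $np: dims = ($d, $d), nx_g = $(d*(nx_loc-2) + 2)")
end
```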

Finally, let's assess the impact of hiding communication behind computation achieved using the @hide_communication macro in the multi-xPU code.

Using the 64-MPI-process configuration, run the multi-xPU code, changing the values of the tuple after @hide_communication as follows:

@hide_communication (2,2)

@hide_communication (16,4)

@hide_communication (16,16)

Then, also run the code once with both the @hide_communication line and the corresponding end statement commented out. On a figure, report the execution time as a function of [no-hidecomm, (2,2), (8,2), (16,4), (16,16)] (note that you should already have the (8,2) case from Task 4 and/or 5), making sure to normalise it by the single-process execution time (from Task 5). Add a short comment related to your results.